Attention-guided Low-light Image Enhancement

Abstract

Low-light image enhancement is challenging because multiple factors, including color, brightness, contrast, artifacts, and noise, need to be handled simultaneously and effectively. To address this multi-faceted problem, this paper proposes a novel attention-guided enhancement solution, on which an end-to-end multi-branch CNN is built. The key to our method is the computation of two attention maps that guide the exposure enhancement and denoising tasks, respectively. In particular, the first attention map distinguishes underexposed regions from well-lit regions, while the second distinguishes noise from real textures. Under their guidance, the proposed multi-branch enhancement network works in an input-adaptive way. Other contributions of this paper include a decomposition-and-fusion design of the enhancement network and a reinforcement-net for further contrast enhancement. In addition, we propose a large dataset for low-light enhancement. We evaluate the proposed method with extensive experiments, and the results demonstrate that our solution outperforms state-of-the-art methods by a large margin, both quantitatively and visually. We additionally show that our method is flexible and effective for other image processing tasks.

Network Architecture

In this paper, we propose a fully convolutional network containing four subnets: an Attention-Net, a Noise-Net, an Enhancement-Net, and a Reinforce-Net. The Attention-Net estimates the illumination so that the method pays more attention to underexposed areas during enhancement. Similarly, the Noise-Net guides the denoising process. Under their guidance, the multi-branch Enhancement-Net performs enhancement and denoising simultaneously. The Reinforce-Net performs contrast re-enhancement to overcome the low-contrast limitation caused by regression.
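The data flow between the four subnets can be illustrated with a small, dependency-free Python sketch. This is not the authors' implementation: every function body below is a hand-written stand-in for a learned CNN, the image is a grayscale list of lists with values in [0, 1], and the exact wiring (for example, the Noise-Net seeing only the input image) is an assumption made for illustration.

```python
from typing import Callable, List

Image = List[List[float]]  # grayscale image, values in [0, 1]


def map_pixels(fn: Callable[..., float], *images: Image) -> Image:
    """Apply fn pixel-wise across one or more same-sized images."""
    return [[fn(*px) for px in zip(*rows)] for rows in zip(*images)]


def attention_net(img: Image) -> Image:
    # Stand-in (assumption): darker pixels get attention closer to 1.
    return map_pixels(lambda v: 1.0 - v, img)


def noise_net(img: Image) -> Image:
    # Stand-in (assumption): a constant "no noise" map.
    # In the real network this map is predicted by a learned subnet.
    return map_pixels(lambda v: 0.0, img)


def enhancement_net(img: Image, attention: Image, noise: Image) -> Image:
    # Stand-in (assumption): brighten each pixel in proportion to its
    # attention value, subtract the estimated noise, clamp to [0, 1].
    def enhance(v: float, a: float, n: float) -> float:
        return min(1.0, max(0.0, v + 0.5 * a * (1.0 - v) - n))

    return map_pixels(enhance, img, attention, noise)


def reinforce_net(img: Image) -> Image:
    # Stand-in (assumption): a min-max contrast stretch.
    flat = [v for row in img for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        return img  # flat image, nothing to stretch
    return map_pixels(lambda v: (v - lo) / (hi - lo), img)


def enhance_low_light(img: Image) -> Image:
    """Compose the four stand-in subnets in the order described above."""
    attention = attention_net(img)  # where is the image underexposed?
    noise = noise_net(img)          # where is the image noisy?
    enhanced = enhancement_net(img, attention, noise)
    return reinforce_net(enhanced)  # contrast re-enhancement


if __name__ == "__main__":
    tiny = [[0.1, 0.9], [0.2, 0.8]]
    print(enhance_low_light(tiny))
```

The point of the sketch is only the input-adaptive behavior: because the attention map is larger for dark pixels, the stand-in enhancement step brightens a pixel at 0.1 far more than a pixel at 0.9.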

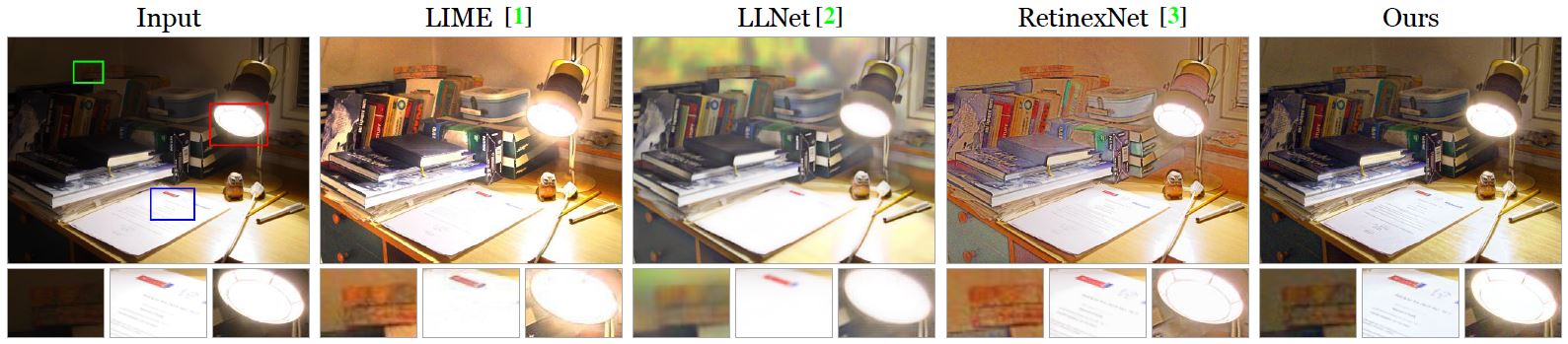

Real Low-light Image Enhancement

We compare our method with state-of-the-art methods on natural low-light images. Our method surpasses the others in two key aspects. First, it restores vivid and natural color, which makes the enhancement results more realistic. In contrast, Retinex-based methods (such as RetinexNet and LIME) cause varying degrees of color distortion. Second, it recovers better contrast and more details. This improvement is especially evident when compared with LLNet, BIMEF, and MBLLEN.

Download

- Supplementary Files
- Synthetic Dataset (Baidu Drive password: os9v)
- Codes (Coming Soon)

Citation

@article{Lv2019AgLLNet,
  title={Attention-guided Low-light Image Enhancement},
  author={Feifan Lv and Yu Li and Feng Lu},
  journal={arXiv preprint arXiv:1908.00682},
  year={2019}
}

References

[1] Xuan Dong, Guan Wang, Yi Pang, Weixin Li, Jiangtao Wen, Wei Meng, and Yao Lu. Fast efficient algorithm for enhancement of low lighting video. In IEEE International Conference on Multimedia and Expo, pages 1–6, 2011.

[2] Shuhang Wang, Jin Zheng, Hai Miao Hu, and Bo Li. Naturalness preserved enhancement algorithm for non-uniform illumination images. IEEE Transactions on Image Processing, 22(9):3538–3548, 2013.

[3] Xiaojie Guo, Yu Li, and Haibin Ling. Lime: Low-light image enhancement via illumination map estimation. IEEE Transactions on Image Processing, 26(2):982–993, 2017.

[4] Zhenqiang Ying, Ge Li, Yurui Ren, Ronggang Wang and Wenmin Wang. A New Low-Light Image Enhancement Algorithm Using Camera Response Model. IEEE International Conference on Computer Vision Workshop, pages 3015–3022, 2017.

[5] Zhenqiang Ying, Ge Li and Wen Gao. A Bio-Inspired Multi-Exposure Fusion Framework for Low-light Image Enhancement. arXiv:1711.00591, 2017.

[6] Chen Wei, Wenjing Wang, Wenhan Yang, and Jiaying Liu. Deep Retinex Decomposition for Low-Light Enhancement. British Machine Vision Conference (BMVC), 2018.

[7] Feifan Lv, Feng Lu, Jianhua Wu and Chongsoon Lim. MBLLEN: Low-light Image/Video Enhancement Using CNNs. British Machine Vision Conference (BMVC), 2018.