MBLLEN: Low-light Image/Video Enhancement Using CNNs

Abstract

We present a deep-learning-based method for low-light image enhancement. The problem is challenging because several factors must be handled simultaneously, including brightness, contrast, artifacts and noise. To address this task, we propose the multi-branch low-light enhancement network (MBLLEN). The key idea is to extract rich features at different levels, enhance them via multiple subnets, and finally produce the output image via multi-branch fusion. In this manner, image quality is improved from different aspects. In extensive experiments, MBLLEN outperforms state-of-the-art techniques by a large margin. We additionally show that our method can be directly extended to handle low-light videos.

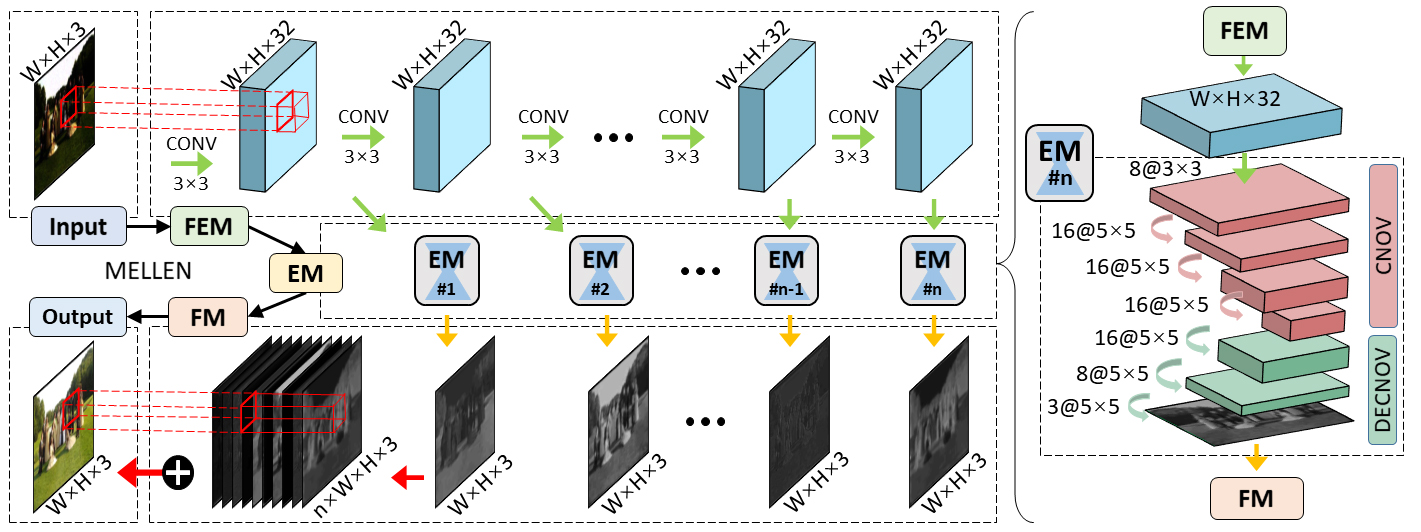

Network Architecture

In this paper, we propose a novel method for low-light image enhancement that builds on recent advances in deep learning. At the core of our method is a fully convolutional neural network, the multi-branch low-light enhancement network (MBLLEN). MBLLEN consists of three types of modules: the feature extraction module (FEM), the enhancement module (EM) and the fusion module (FM). The network learns to 1) extract rich features at different levels via FEM, 2) enhance the features of each level separately via EM, and 3) obtain the final output by multi-branch fusion via FM.
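To make the three-module data flow concrete, here is a minimal NumPy sketch of a forward pass. It is not the authors' implementation: the class name `ToyMBLLEN`, the number of levels, the channel width, the two-convolution EM branches, the 1×1 fusion convolution and the random, untrained weights are all illustrative assumptions. What it is meant to show is the wiring: each FEM level feeds both the next FEM layer and its own EM branch, and FM merges all branch outputs into a single RGB image.

```python
import numpy as np

def conv2d(x, w, b):
    """Same-padded, stride-1 2D convolution (cross-correlation, as in DL frameworks).
    x: (C_in, H, W), w: (C_out, C_in, k, k), b: (C_out,) -> (C_out, H, W)"""
    c_out, _, k, _ = w.shape
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))  # zero padding keeps H, W
    _, h, wd = x.shape
    out = np.zeros((c_out, h, wd))
    for i in range(k):
        for j in range(k):
            patch = xp[:, i:i + h, j:j + wd]  # shifted view, (C_in, H, W)
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], patch)
    return out + b[:, None, None]

def relu(x):
    return np.maximum(x, 0.0)

def init_conv(rng, c_in, c_out, k):
    # Random weights: this sketch is about structure, not trained behaviour.
    return rng.normal(0.0, 0.1, size=(c_out, c_in, k, k)), np.zeros(c_out)

class ToyMBLLEN:
    """Hypothetical, heavily reduced FEM -> EM -> FM pipeline."""

    def __init__(self, n_levels=3, width=8, seed=0):
        rng = np.random.default_rng(seed)
        # FEM (assumed): a single stream of 3x3 convs; the first reads RGB.
        self.fem = [init_conv(rng, 3 if i == 0 else width, width, 3)
                    for i in range(n_levels)]
        # EM (assumed): one small subnet per FEM level, two 3x3 convs
        # standing in for the real subnets, mapping features back to RGB.
        self.em = [(init_conv(rng, width, width, 3), init_conv(rng, width, 3, 3))
                   for _ in range(n_levels)]
        # FM (assumed): a 1x1 conv fusing all concatenated branch outputs.
        self.fm = init_conv(rng, 3 * n_levels, 3, 1)

    def __call__(self, img):
        feats, branches = img, []
        for (w, b), ((w1, b1), (w2, b2)) in zip(self.fem, self.em):
            feats = relu(conv2d(feats, w, b))      # next FEM level
            h = relu(conv2d(feats, w1, b1))        # EM branch for this level
            branches.append(relu(conv2d(h, w2, b2)))
        fused = np.concatenate(branches, axis=0)   # stack along channel axis
        w, b = self.fm
        return conv2d(fused, w, b)

if __name__ == "__main__":
    img = np.random.default_rng(1).uniform(0.0, 0.2, size=(3, 16, 16))  # dark toy image
    out = ToyMBLLEN()(img)
    print(out.shape)  # (3, 16, 16)
```

Because the weights are untrained, the output values carry no meaning; only the shapes and the branching structure are of interest. Each branch sees features from a different depth, which is what lets the fused output draw on several levels of representation at once.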

Low-light Image Results

We compare MBLLEN with five state-of-the-art methods: the dehazing-based method (Dong) [1], the naturalness preserved enhancement algorithm (NPE) [2], the illumination-map-estimation-based algorithm (LIME) [3], the camera-response-based algorithm (Ying) [4], and the bio-inspired multi-exposure fusion algorithm (BIMEF) [5].

Low-light Video Results

We compare MBLLEN with its video version (MBLLVEN) and three other state-of-the-art methods: the illumination-map-estimation-based algorithm (LIME) [3], the camera-response-based algorithm (Ying) [4], and the bio-inspired multi-exposure fusion algorithm (BIMEF) [5].

Download

- Supplementary Files

- Datasets (Baidu Drive Password: ayo0)

- Code

Citation

@article{Lv2018MBLLEN,
  title={MBLLEN: Low-light Image/Video Enhancement Using CNNs},
  author={Feifan Lv and Feng Lu and Jianhua Wu and Chongsoon Lim},
  journal={British Machine Vision Conference},
  year={2018}
}

References

[1] Xuan Dong, Guan Wang, Yi Pang, Weixin Li, Jiangtao Wen, Wei Meng, and Yao Lu. Fast efficient algorithm for enhancement of low lighting video. In IEEE International Conference on Multimedia and Expo, pages 1–6, 2011.

[2] Shuhang Wang, Jin Zheng, Hai-Miao Hu, and Bo Li. Naturalness preserved enhancement algorithm for non-uniform illumination images. IEEE Transactions on Image Processing, 22(9):3538–3548, 2013.

[3] Xiaojie Guo, Yu Li, and Haibin Ling. LIME: Low-light image enhancement via illumination map estimation. IEEE Transactions on Image Processing, 26(2):982–993, 2017.

[4] Zhenqiang Ying, Ge Li, Yurui Ren, Ronggang Wang, and Wenmin Wang. A new low-light image enhancement algorithm using camera response model. In IEEE International Conference on Computer Vision Workshops, pages 3015–3022, 2017.

[5] Zhenqiang Ying, Ge Li, and Wen Gao. A bio-inspired multi-exposure fusion framework for low-light image enhancement. arXiv preprint arXiv:1711.00591, 2017.