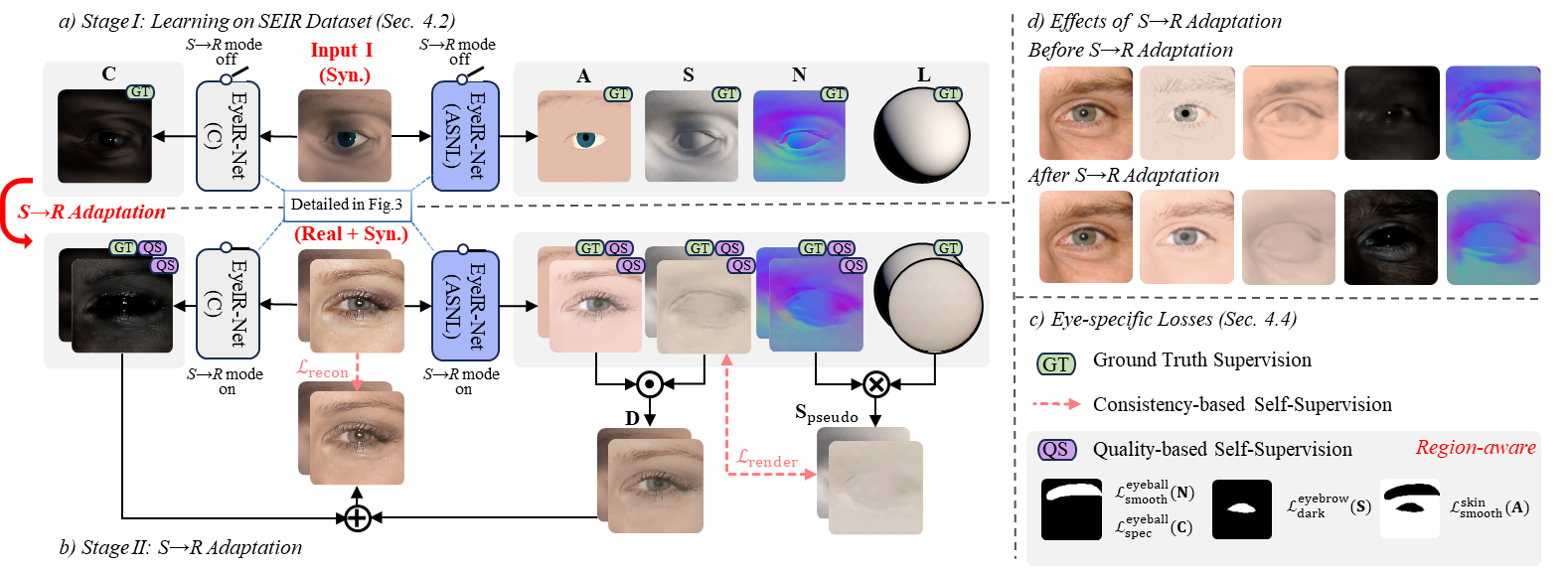

- In a) Stage I, the EyeIR-Net is trained on SEIR under ground-truth supervision.

- To obtain high-quality results on REIR, we propose S→R adaptation: in b) Stage II, the EyeIR-Net is trained simultaneously on SEIR and REIR, using the supervised signals from SEIR to guide self-supervised learning on REIR.

- We facilitate S→R adaptation by proposing a novel RBDecoder.

- Eye-specific losses, shown in c), are designed for training. In particular, to handle the eye-specific ambiguities analyzed in Sec. 1, the quality-based losses account for the properties of different regions.

- The effect of our EyeIR framework is shown in d).

Abstract

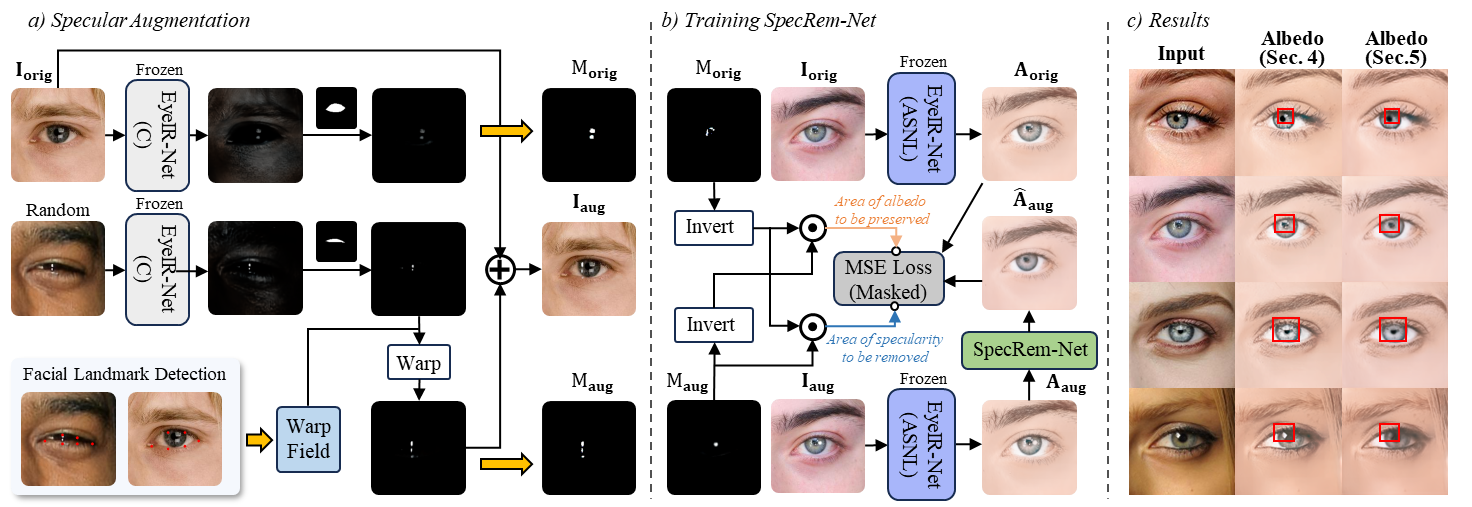

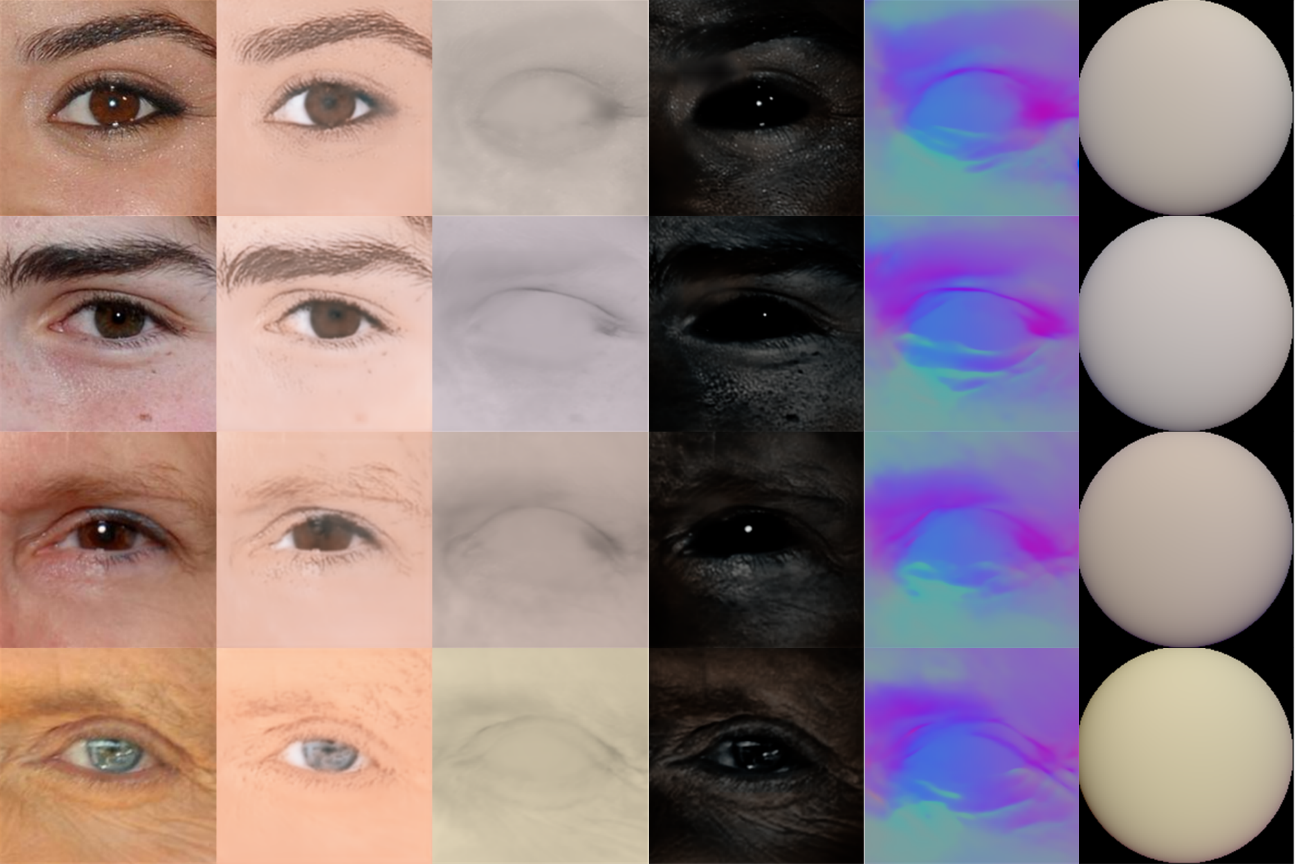

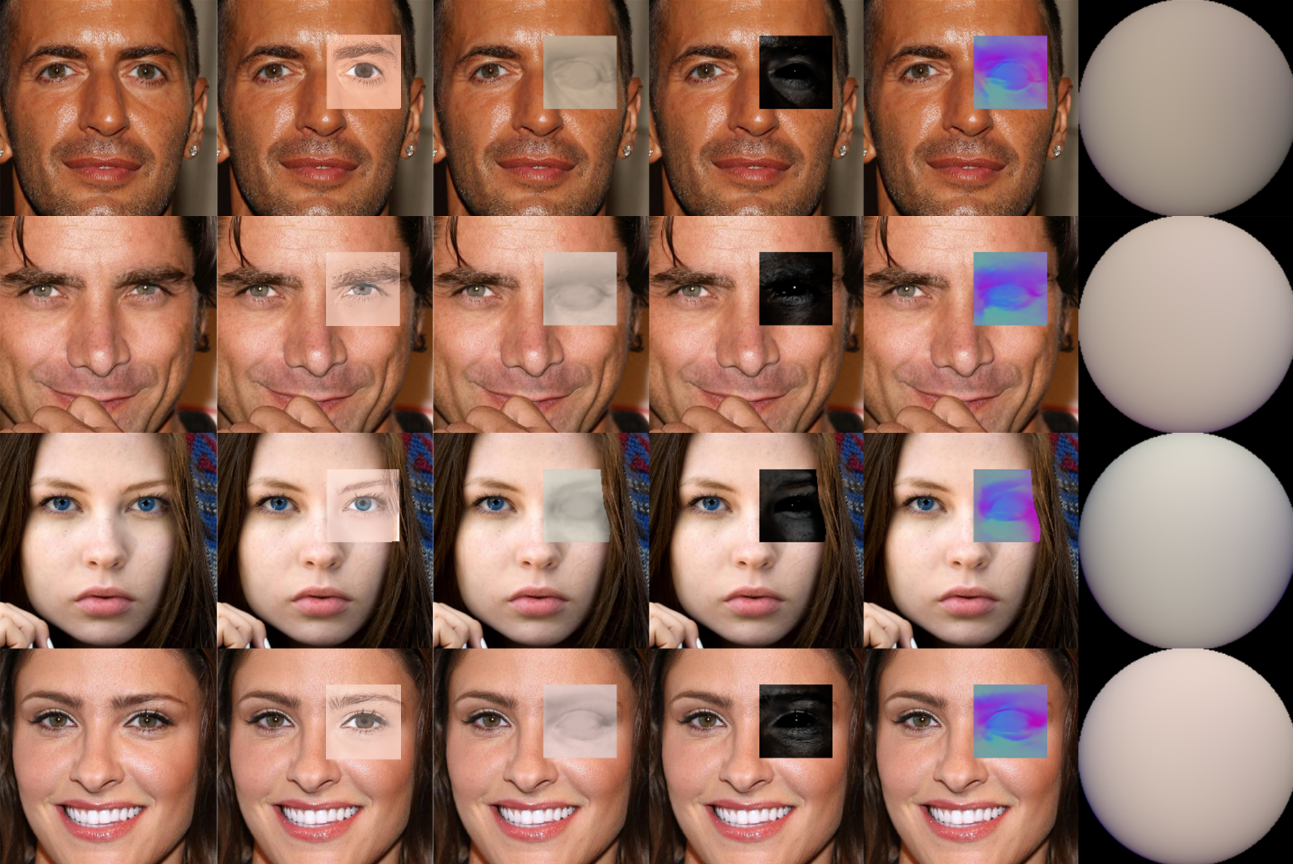

We propose a method to decompose a single in-the-wild eye region image into albedo, shading, specular, normal and illumination components. This inverse rendering problem is particularly challenging due to the inherent ambiguities and complex properties of the natural eye region. To address this problem, we first construct a synthetic eye region dataset with rich diversity. We then propose a synthetic-to-real adaptation framework that leverages the supervision signals from synthetic data to guide the direction of self-supervised learning. We design region-aware self-supervised losses based on image formation and the intrinsic properties of the eye region, which refine each predicted component through mutual learning and reduce the artifacts caused by the ambiguities of natural eye images. In particular, we address the demanding problem of specularity removal in the eye region. We show high-quality inverse rendering results of our method and demonstrate its use in a number of applications.
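To make the idea of a region-aware, image-formation-based self-supervised loss concrete, the following is a minimal sketch rather than the formulation used in this work. It assumes a common intrinsic image model in which the image is approximately albedo times shading plus an additive specular term, and it assumes a per-pixel integer region map with one weight per region. The function names `reconstruct` and `region_weighted_recon_loss`, the L1 error, and the weighting scheme are all hypothetical choices made for illustration.

```python
import numpy as np

def reconstruct(albedo, shading, specular):
    """Assumed image formation: diffuse term (albedo * shading) plus additive specular."""
    return np.clip(albedo * shading + specular, 0.0, 1.0)

def region_weighted_recon_loss(image, albedo, shading, specular,
                               region_map, region_weights):
    """Weighted mean absolute error between the input and its re-rendering.

    image, albedo, specular: float arrays of shape (H, W, 3) in [0, 1]
    shading:                 float array of shape (H, W, 1) or (H, W, 3)
    region_map:              int array of shape (H, W), one region label per pixel
    region_weights:          float array of shape (num_regions,), hypothetical weights
    """
    recon = reconstruct(albedo, shading, specular)
    per_pixel = np.abs(image - recon).mean(axis=-1)   # (H, W) error per pixel
    weights = region_weights[region_map]              # (H, W) weight looked up per pixel
    return float((weights * per_pixel).sum() / weights.sum())
```

No ground-truth components are needed to evaluate such a loss: only the input image and the predicted components enter it, which is why a term of this kind can be applied to real images without labels.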